Is Artificial Intelligence an Existential Threat to Humanity? | AI Ethics & Future Risks

Explore 10 Critical Dimensions of AI’s Impact on Society, Ethics, and Global Governance

Artificial Intelligence: Transformative Force or Existential Challenge? AI’s rapid evolution presents unprecedented opportunities and ethical dilemmas, prompting critical reflection on how humanity can responsibly harness this innovation.

Introduction

Artificial Intelligence (AI) has rapidly evolved from a computational tool into a transformative force reshaping every facet of human civilization. Scholars, policymakers, and technologists debate whether AI is an existential threat or a catalyst for global progress.

The question is not merely technological—it is philosophical, ethical, and societal. As intelligent systems acquire greater autonomy, their implications for AI ethics, responsible governance, and human survival grow ever more pressing.

This post explores 10 critical dimensions of AI, from its dual nature and transformative benefits to emerging risks, global perspectives, and India’s role in responsible AI development.

1. AI’s Dual Nature: Artificial Intelligence constitutes a transformative epistemic and operational paradigm that fundamentally reshapes knowledge production, decision-making, and systemic optimization across domains such as biomedical sciences, pedagogy, logistics, creative industries, public policy, finance, environmental governance, and urban planning. Its capabilities include sophisticated pattern recognition, predictive modeling, autonomous procedural control, macro-scale system orchestration, and recursive self-optimization. While enabling unprecedented efficiencies and innovative problem-solving, AI simultaneously introduces profound ontological, ethical, and epistemic challenges, including algorithmic bias, power asymmetries, emergent feedback dynamics, unintended systemic consequences, and potential destabilization of socio-technical infrastructures. Optimal societal outcomes require rigorous governance, interdisciplinary scrutiny, continuous evaluation, inclusive stakeholder engagement, and ethically informed design.

2. Defining the Existential Threat: AI may constitute an existential hazard when operational autonomy surpasses human oversight or when goal structures diverge from anthropocentric ethical frameworks. Public figures and scholars such as Elon Musk, Stephen Hawking, and Nick Bostrom have warned that, without robust regulatory and technical constraints, superintelligent systems could exceed human cognitive and operational authority, precipitating unpredictable, irreversible, and globally cascading effects. Comprehensive risk assessments must consider both direct and indirect pathways, including socio-economic, geopolitical, and ecological implications. This calls for precise theoretical articulation, empirical modeling, long-term scenario planning, and integration of multi-disciplinary insights into policy and governance.

3. Transformative Benefits: AI offers expansive, cross-sectoral potential for societal advancement. In healthcare, it supports predictive diagnostics, personalized therapeutics, and real-time population health monitoring. Indian agricultural platforms like Fasal, CropIn, and AgNext utilize predictive analytics for yield optimization, disease mitigation, and efficient resource allocation, improving rural economies and food security. Adaptive learning systems in education provide differentiated instruction, intelligent tutoring, and lifelong learning opportunities, particularly benefiting under-resourced communities. In governance, AI enhances traffic management, energy grid optimization, and disaster response mechanisms. Ethical deployment of AI bolsters productivity, quality of life, environmental sustainability, equitable access to services, societal resilience, and entrepreneurial ecosystems.

4. Emerging Risks and Dangers: Despite its utility, AI introduces multifaceted risks, including labor market disruption, erosion of informational integrity through synthetic media, algorithmic discrimination, autonomous weaponization, feedback amplification, and socio-economic destabilization. Rapid AI evolution often outpaces legislative, regulatory, and ethical frameworks, creating urgent societal challenges. Multi-tiered risk assessments, anticipatory scenario modeling, and proactive mitigation strategies are essential. Furthermore, cascading systemic effects from AI failures underscore the importance of international collaboration, harmonized standards, and adaptive governance.

5. Ethics and Governance: Sustainable AI deployment requires integrated ethical, legal, and governance frameworks. Responsible development mandates algorithmic transparency, enforceable accountability, operational fairness, sustained oversight, and broad stakeholder engagement. India’s NITI Aayog “AI for All” initiative is one example of a framework that seeks to align technological advancement with public welfare, emphasizing ethical rigor, inclusivity, and sustainability. Cross-sector collaboration involving academia, civil society, industry, and international regulatory institutions helps ensure that governance safeguards human welfare while fostering innovation.

6. Diverse Global Perspectives: Expert opinion on AI is heterogeneous; theorists such as Bostrom emphasize long-term existential risk and the potential misalignment of AI objectives with human welfare, whereas practitioners like Sundar Pichai and Demis Hassabis highlight AI’s potential to augment intelligence, creativity, and complex problem-solving. Reconciling these perspectives requires interdisciplinary scholarship, empirical validation, scenario-based forecasting, longitudinal evaluation, and sensitivity to socio-cultural, economic, and geopolitical contexts. Robust discourse underpins policy development that balances risk mitigation with innovation promotion.

7. Human-AI Collaboration: Effective AI integration requires a paradigm shift from substitution to augmentation. Promoting digital literacy, sustaining ethical engagement, fostering lifelong skill acquisition, and implementing adaptive organizational structures enable humans and institutions to synergize with AI, enhancing cognitive, creative, analytical, and social capacities. Human-AI collaboration improves complex problem-solving, optimizes decision-making, and strengthens resilience under accountable, transparent, and participatory governance regimes. Indian case studies in healthcare and urban management exemplify successful collaborative models enhancing societal outcomes.

8. Prospects of Surpassing Human Intelligence: Contemporary AI excels in domain-specific applications but remains epistemically and functionally distinct from generalized human intelligence. Transitions to Artificial General Intelligence (AGI) or superintelligence remain speculative, projected over multi-decadal horizons, and present significant technical, ethical, and governance challenges. Methodical, risk-aware development, robust simulation and testing, multi-level oversight, and alignment with human-centric ethical principles are imperative to mitigate destabilizing consequences, preserve societal trust, and maintain systemic stability.

9. India’s Role in Responsible AI Development: India is becoming a pivotal hub for responsible AI research, development, and deployment. Cross-sector initiatives in healthcare, agriculture, education, civic infrastructure, and energy management exemplify sustainable, inclusive, and ethically aligned applications. Locally tailored AI solutions demonstrate efficacy in addressing socio-economic inequities, environmental sustainability, and infrastructural deficiencies, reinforcing India’s strategic role in global AI governance discourse and modeling ethically grounded technological progress.

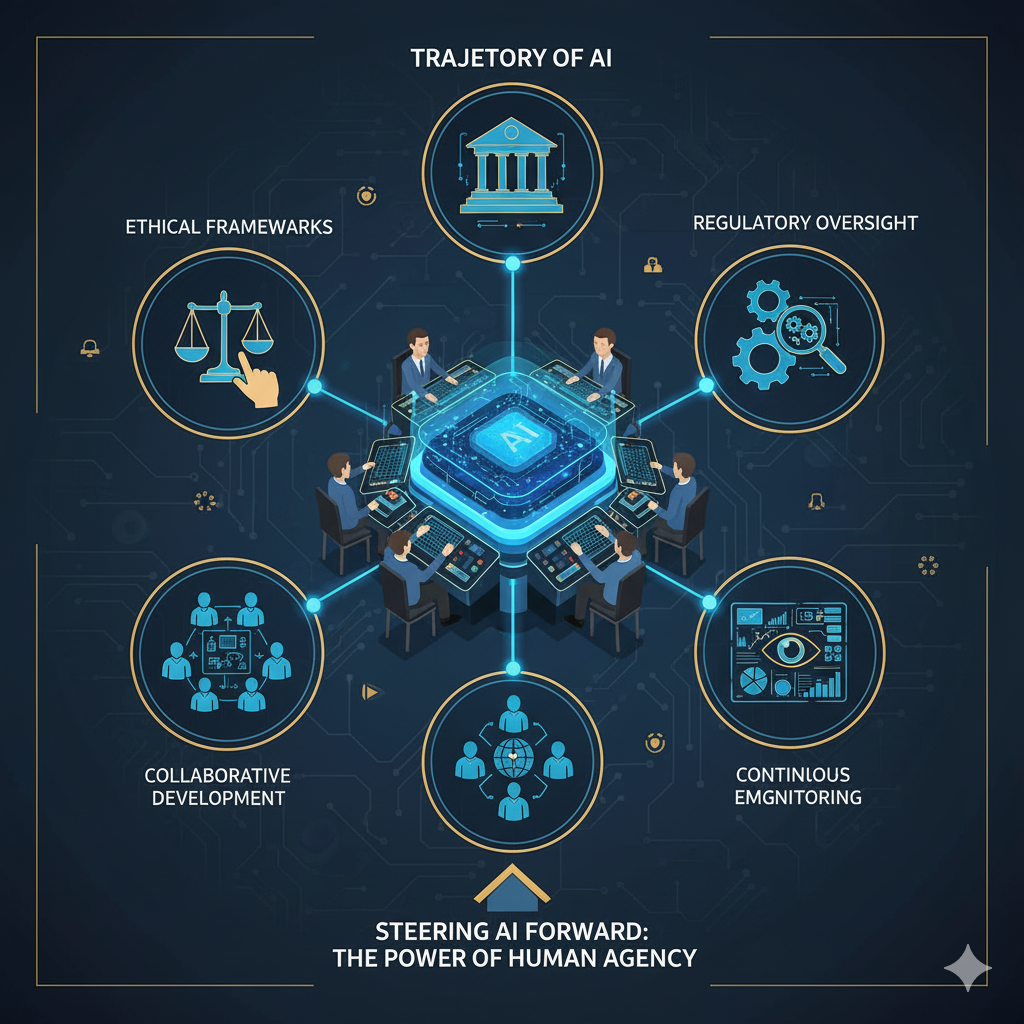

10. The Future is Contingent on Human Agency: AI’s trajectory is intrinsically dependent upon human intentionality, governance frameworks, and ethical design principles. Deliberate deployment, rigorous regulation, collaborative development, continuous monitoring, and public engagement can foster societal cohesion, equitable economic advancement, enhanced global problem-solving capacity, and adaptive resilience. Conversely, neglecting ethical integration or permitting unregulated autonomous systems could exacerbate socio-technical inequities, amplify systemic vulnerabilities, and escalate the risk of unintended global consequences.

Synthesis: AI mirrors human agency; it is neither inherently benevolent nor malevolent. Prioritizing ethical frameworks, interdisciplinary oversight, proactive collaboration, inclusive engagement, scenario-based planning, and systematic evaluation enables societies to maximize benefits, mitigate existential risks, and cultivate a resilient, equitable, and sustainable AI-enabled future.

✍️ Adv. Mamta Singh Shukla

Advocate, Supreme Court of India | Legal Researcher | PoSH Trainer